3 Ways to Perform Web Crawling for Hackers

Wikipedia definition:

A Web Crawler is a type of web robot or program agent. In general, it starts with a list of addresses to visit (also called seeds). As the crawler visits these addresses, it identifies all links on the page and adds them to the list of addresses to visit. Such addresses are visited recursively according to a set of rules.
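To make the definition concrete, here is a minimal sketch of that loop in Python, using only the standard library. It is an illustration, not how any of the tools below are implemented. The names `LinkExtractor`, `crawl`, `fetch` and `max_pages` are assumptions made for this example, and the single "rule" applied is to stay on the hosts of the seed addresses. The `fetch` argument is a hypothetical callable that returns the HTML of a URL (or `None` on failure), so the sketch can be tried offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href/src attribute values found in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.links.append(value)


def crawl(seeds, fetch, max_pages=50):
    """Breadth-first crawl from the seeds, staying on the seeds' hosts.

    fetch is a hypothetical callable: URL -> HTML string, or None on failure.
    Returns the visited URLs in the order they were visited.
    """
    allowed_hosts = {urlparse(seed).netloc for seed in seeds}
    queue = deque(seeds)   # addresses still to visit
    seen = set(seeds)      # addresses already queued, to avoid loops
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        visited.append(url)
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for link in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _ = urldefrag(urljoin(url, link))
            if urlparse(absolute).netloc in allowed_hosts and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

For a quick offline test, `fetch` can be the `.get` method of a dictionary that maps URLs to HTML strings. Against a real site, it could wrap `urllib.request.urlopen`, with permission from the site owner.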

Metasploit

Using Metasploit’s msfcrawler auxiliary module, we set the target in the rhosts option and run the module.

use auxiliary/crawler/msfcrawler

set rhosts www.target.com

exploit

After it runs, msfcrawler lists links that are easy to miss when browsing the site manually, such as JS files and PHP files.

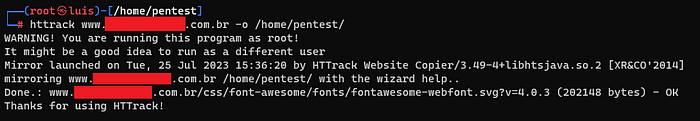

HTTrack

HTTrack is a free and open source tool used to download a website to a local directory. It recursively builds all the directories and copies the HTML, images and other files from the server to your computer.

As an example, type the following in your terminal:

httrack www.target.com -O /root/file/

Burp Suite

Burp Suite is a tool from PortSwigger, available in a free Community Edition, that maps websites and intercepts communications. It works as a proxy, so every request can be inspected.

For our scenario, we will intercept a communication with the target website as shown in the image.

After intercepting the message, Burp Suite automatically starts mapping the website and assembles its structure, containing the root and folders. To see it, open the “Target” tab and then “Site map”.

It is also possible to add more than one site as a “Target” to map. Burp Suite will automatically identify that it is another site and organize it in the same way.
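The structure described above, with one root per site and the folders nested under it, can be pictured with a short Python sketch. This is only an illustration of the idea and not Burp Suite's implementation. The function names `build_site_map` and `render` and the sample URLs are assumptions made for this example.

```python
from urllib.parse import urlparse


def build_site_map(urls):
    """Group URLs into a nested dict: host -> folder -> ... -> file."""
    site_map = {}
    for url in urls:
        parsed = urlparse(url)
        # Each host gets its own root, so different sites stay separate.
        node = site_map.setdefault(parsed.netloc, {})
        for segment in parsed.path.split("/"):
            if segment:
                node = node.setdefault(segment, {})
    return site_map


def render(tree, indent=0):
    """Return the tree as a list of indented lines, sorted by name."""
    lines = []
    for name in sorted(tree):
        lines.append("  " * indent + name)
        lines.extend(render(tree[name], indent + 1))
    return lines


# Hypothetical URLs, as if observed while browsing through the proxy.
observed = [
    "http://www.target.com/",
    "http://www.target.com/admin/login.php",
    "http://www.target.com/js/app.js",
    "http://other.example/index.html",
]
print("\n".join(render(build_site_map(observed))))
```

Each host becomes a separate root in the tree, which mirrors how a second site shows up as its own entry.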

For more content about hacking and cyber security, follow and leave a 👏 below.